AI startups are just Big Tech's low-risk R&D department now

Large tech companies have significantly scaled back their direct investment in product and engineering talent. Instead, they are using their capital and monopoly power to corner emerging opportunities indirectly: funding startups, extracting their talent and technologies, and relying on their market power to ensure success.

Amazon hires from AI robotics startup Covariant, licenses technology

Amazon is hiring Pieter Abbeel, Peter Chen, and Rocky Duan and licensing Covariant’s robotic foundation models to advance the state-of-the-art in intelligent and safe robots.

Amazon acquires AI startup one employee at a time to avoid regulatory scrutiny

Regulators probably wouldn’t let Amazon acquire an AI enterprise startup, so they just hired everyone who ran it.

The Generative AI Startups That May Look for a Buyer

Google’s complex deal to hire the founders and other researchers of Character.AI—the third such deal between a tech giant and an artificial intelligence startup in the past six months—raises a question: Which startup might be next? Startups that collectively raised billions to develop their own…

This is far from the first acqui-hire of a notable AI company by a large tech company in the last 18 months; it follows a string of similar deals by other hyperscalers. It now appears clear that this is part of a new strategy by large tech companies to keep performing M&A despite regulatory scrutiny.

Instead of acquiring the company, they hire the founders and key talent and license the company’s technology and IP, or merely access to it. This effectively circumvents existing antitrust review processes and drains the startup’s core into its new owner without the acquirer assuming any liabilities or responsibilities.

Winners: Big Tech. Losers: early employees, customers and the market.

This new type of M&A is a win for large tech companies and few others.

Early employees who joined the startup hoping for an eventual exit are left with little to show for their efforts.


How Big Tech is swallowing the AI industry

Amazon and Microsoft have shown how Big Tech is going to consolidate the AI industry one way or another.

Alexandra Miller, an Amazon spokesperson, told The Verge that the company had hired “close to” 66 percent of Adept’s employees. In an internal memo published by GeekWire’s Taylor Soper, SVP Rohit Prasad said that, like Microsoft with Inflection, Amazon will also be licensing Adept’s technology to “accelerate our roadmap for building digital agents that can automate software workflows.”

Early customers also lose out in this scenario as the acqui-hired company can’t transfer them to the new owner, leaving the products and services they have come to rely on to wither and die.

Inflection AI will limit its free chatbot called Pi as it pivots to the enterprise

CEO Mustafa Suleyman left Inflection for Microsoft in March.

Big Tech: More equal than other investors

Another constituency hurt in this scenario is other investors, who are unceremoniously squeezed out of the picture.

Building AI is a capital-intensive business. For many AI startups, investment from large tech companies is an initial blessing, but it comes with strings attached: usually lock-in to the funding hyperscaler’s compute architecture in the form of compute credits and platform commitments (e.g. to Azure, Bedrock or GCP) as part of the investment deal. OpenAI and Microsoft are an example, where much of the original funding deal is valued in compute credits.

Why AI Infrastructure Startups Are Insanely Hard to Build

Level of difficulty: insane

This creates a power structure vastly in favor of the hyperscaler. Compute accounts for the majority of an AI startup’s costs, and the hyperscaler controls the value of the compute credits and benefits directly from the startup’s operational spending even after the credits run out, all while being able to present that compute spend as a sign of business demand.

Due to the structure of these new deals, M&A provisions in the funding contract are not triggered and there’s very little recourse for other investors (although we can expect a hurried change of provisions in future funding contracts).

Example: OpenAI

OpenAI is a good example of many of these dynamics at play. After seeing its disruptive product, Microsoft invested in the company through a deal heavy on compute credits, and the company came within a hair of being gutted for talent by Microsoft during its board crisis in late 2023.

The current conversation about OpenAI’s latest funding round needs to be seen in the context of these developments. Interest from all the major AI hyperscalers can be explained not only by OpenAI’s critical role in keeping the AI narrative alive and well, which would undoubtedly be at risk should the company fall on hard times, but also by the strategic aim of ensuring observability and equal access to potential breakthrough technology.


Apple and Nvidia may invest in OpenAI

OpenAI might be raising a bunch of money soon.

The market and regulators have started to take notice of the pattern.

Opinion | Big Tech’s A.I. Takeover and the Slow Death of Silicon Valley Innovation

The leading companies are co-opting Silicon Valley’s traditional cycle of disruption.

US Probes Microsoft’s Deal With AI Firm Inflection, WSJ Says

US regulators are investigating whether a deal Microsoft Corp. struck with AI startup Inflection may have been structured to avoid scrutiny, the Wall Street Journal reported, citing a person familiar with the matter.

But serious intervention is not in sight anytime soon.

Startups: The budget R&D department for AI Tech

It’s no secret that large tech companies have significantly scaled back their direct investment in product and engineering talent. For much of the last decade, competition in tech outside of M&A was fought by rapidly deploying product teams to front-run competitors on emerging opportunities.

It’s also no secret that AI adoption is lagging behind market expectations, in large part due to a lack of product investment. If anything, Anthropic’s Artifacts feature in Claude shows just how much room there is for product innovation in AI. But hyperscalers currently, and probably correctly, reason that investing in product is secondary to investing in the infrastructure that powers product innovation, in part because they can acquire that product innovation later for a fraction of the cost.

As a result, Silicon Valley employment of previously coveted engineering and product talent has dropped significantly, driven by layoffs at all the large hyperscalers, as GPUs have replaced engineering talent as the monopolizable investment opportunity.

Open Source as the primer

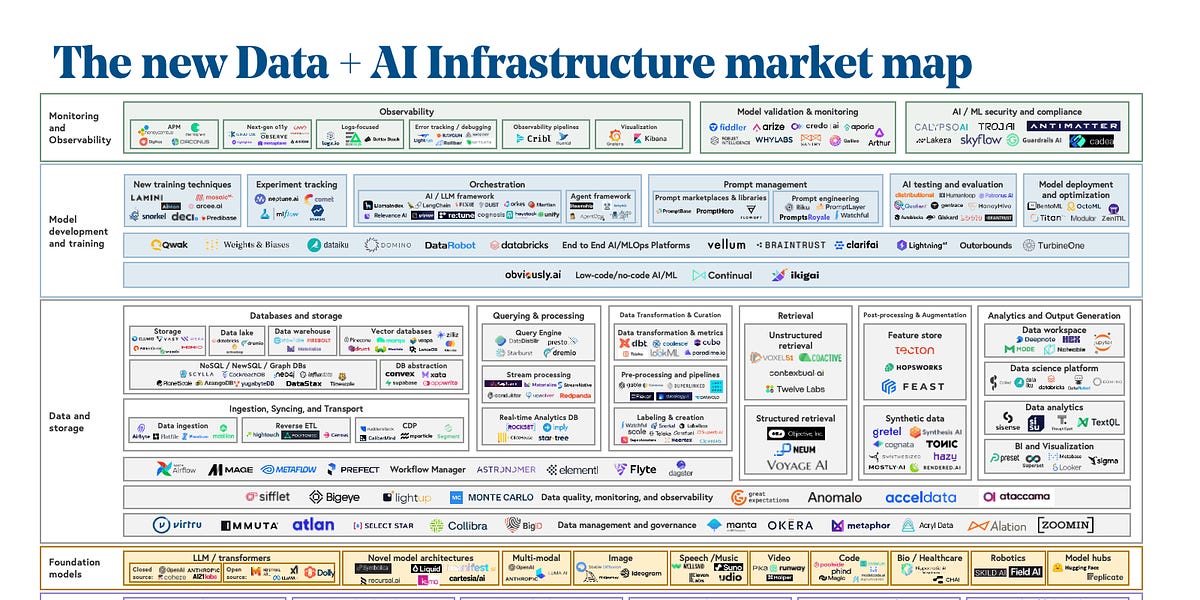

By open-sourcing the basic tools for AI, hyperscalers have enabled startups to build products in the field and have used this to replace internal teams as the primary source of product R&D. In the wide-open field of possible AI use cases, this represents a carpet-bombing approach to innovation: give away the basic tools for free and let startups do the R&D at marginal cost to the hyperscaler.

By using its compute platform for observability to spot startups with growing demand, and making limited strategic investments for early visibility, the hyperscaler can identify exactly when to swoop in and pick up validated growth opportunities at no risk, all while earning positive PR for its association with “open source” and using the acqui-hire as a regulatory foil.

The tech landlords hold all the cards

Even before AI, tech companies managed to establish themselves as powerful landlords and gatekeepers of business in the digital economy. Even Apple would not have been able to succeed as a company if it had been subject to its own 30% platform fee, reliant on massive advertising spend with Google and Facebook to find customers, or exposed to its own practice of “sherlocking”.

Opinion: The predatory landlords of the platform economy

Platform landlords are increasingly controlling the digital economy, allocating value to themselves. AI is going to make it worse, argues Georg Zoeller.

With AI, things are significantly worse:

- The foundation model business has quickly converged into a Big Tech-only playground. A model like Google Gemini costs USD 250M for training alone and enters a market with little competitive edge and vicious price dumping at the hands of Meta. Data, and access to data for training and fine-tuning, is quickly becoming a limiting and increasingly costly factor, massively favoring large tech companies with existing platforms and the leverage to force access to data.

- Building downstream on proprietary foundation models is economic suicide. In a business stack that starts with USD 40,000 GPUs (marked up by an astonishing 10-15x), runs through compute hyperscalers (3-5x markup) and must eventually satisfy OpenAI’s and Anthropic’s VCs (10x in 10 years), “ChatGPT in a trenchcoat” offers little in the way of a moat. In fact, the rise of code generation is rapidly eroding whatever technical moats remain.

- Even when taking advantage of “Open Source” models, inference, on top of traditional infrastructure spend such as bandwidth and storage, can account for the majority of an AI startup’s capital expenses, requiring massive capital infusions that few investors are willing to provide. Big Tech stands ready to spend money to make money (“Nvidia invests in Cohere so Cohere has the money to buy more Nvidia cards” is a common enough story at this point), but with that money come strings that guarantee Big Tech won’t be disrupted.

- The frontier nature of the ecosystem, with its rapid progress driven by decentralized research, Open Source and weekly disruptive discoveries, produces a vastly heightened risk profile compared to previous decades of technology. Open Source in fact insulates technology companies from disruption: they trade the opportunity to build proprietary technological moats for disruption immunity, because no one else (including startups) can build proprietary value on the technology either.
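The point about building on proprietary foundation models stacks several markups on top of each other. As a rough back-of-the-envelope sketch, the snippet below multiplies the quoted ranges, assuming that each layer's markup applies to the price it pays the layer below and that the GPU markup is measured against production cost; both assumptions are mine rather than part of the quoted figures, and the function names are illustrative only. The VC expectation is a return target rather than a per-unit markup, so it is shown separately as an implied annual growth rate.

```python
# Back-of-the-envelope sketch of how the quoted markups compound.
# Assumption (not from the source figures): each layer's markup multiplies
# the price it pays the layer below, and the GPU markup is relative to
# production cost.

GPU_MARKUP = (10, 15)        # vendor markup range quoted in the text
HYPERSCALER_MARKUP = (3, 5)  # cloud provider markup range quoted in the text


def combined_markup(*layers):
    """Multiply per-layer (low, high) markup ranges into one overall range."""
    low, high = 1.0, 1.0
    for layer_low, layer_high in layers:
        low *= layer_low
        high *= layer_high
    return low, high


def implied_annual_return(multiple, years):
    """Compound annual growth rate needed to reach `multiple` over `years`."""
    return multiple ** (1 / years) - 1


if __name__ == "__main__":
    low, high = combined_markup(GPU_MARKUP, HYPERSCALER_MARKUP)
    print(f"Compute reaches the startup at {low:.0f}x to {high:.0f}x production cost")
    # The VC target is a return on capital, not a per-unit markup.
    print(f"A 10x return in 10 years implies {implied_annual_return(10, 10):.1%} per year")
```

Under these assumptions, compute reaches the startup at roughly 30x to 75x its production cost, and a 10x return over 10 years requires about 25.9% compound growth per year, all of which has to be covered before the startup earns anything itself.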

Given these dynamics, it’s a brutally effective monopoly strategy executed on top of a natural infrastructure and market monopoly that new entrants will be unable to overcome without regulatory intervention.

At this point, we should not expect any AI startup to emerge as a disruptor challenging Big Tech, and OpenAI is the best example of this.

Lessons for Investors and Governments

Investors need to understand the situation carefully before injecting more money into the market.

Currently, governments employing a “tech business as usual” investment strategy are pouring billions in subsidies, incentive schemes and direct investments into AI initiatives, startups and corporate AI adoption, hoping to create jobs and wealth and to spur technology adoption. That is unlikely to play out.

Even when programs are not directly pushing money and “active user metrics” into Big Tech companies (as with Singapore IMDA’s Microsoft Copilot subsidy, a bit of a mystery given Copilot’s lackluster success in real-world applications so far), the structure of the ecosystem ensures that the primary beneficiaries of these subsidies will be the familiar hyperscalers. And with this novel M&A strategy employed by Silicon Valley, the chances of successful local exits have dropped to essentially zero.

Microsoft collaborates with Enterprise Singapore, AI Singapore, and the Infocomm Media Development Authority to accelerate AI transformation for SMEs with AI Pinnacle Program

Left to right: Mr. Kiren Kumar, Deputy Chief Executive, Infocomm Media Development Authority; Mr. Laurence Liew, Director, AI Innovation, AI Singapore; Ms. Andrea Della Mattea, President, Microsoft ASEAN; Mr. Gan Kim Yong, Deputy Prime Minister and Minister for Trade and Industry; Ms. Lee Hui Li, Managing Director, Microsoft Singapore; Mr. Soh Leng Wan, Assistant Managing Director (Manufacturing), Enterprise Singapore

Rather than injecting money into large tech companies, governments should more urgently invest in deeply understanding the structure and incentive system of the market, and identify the interventions required to make AI a net positive for their constituents.

While understandable, the FOMO spending triggered by the much-talked-about “AI Race”, which is currently propelling Nvidia stock to record highs, will likely be seen as a mistake in hindsight. If OpenAI’s case is any indication, in a rapidly developing field the advantage lies with the second movers (Meta and Apple in this case).

To avoid a repeat of the outcomes of the crypto boom, the order of the day for technology investments has to be “understand deeply, then move”.

Lessons from history

Given the highly concentrated nature of compute infrastructure ownership, it is worthwhile to review history from the age of electrical infrastructure.

To drive the buildup and standardization of electrical infrastructure throughout the United States, the government supported the carve-up of the country to avoid competition. This led to a decades-long struggle to find the right balance of infrastructure investment, competition and regulation, with reining in corruption and monopoly abuse a constant challenge.

Compute, due to its heavily infrastructure-like nature, shares many similarities. It seems obvious that significant regulatory oversight will have to be put in place to prevent owners of essential infrastructure from abusing their monopoly power, especially given the hyperscalers’ long track record of using their own marketplaces to crush other participants.

About the Author

Georg Zoeller is a co-founder of the Centre for AI Leadership and a former Facebook/Meta Business Engineering Director. He has decades of experience working on the frontlines of technology and was instrumental in building and evolving Facebook’s Partner Engineering and Solution Architecture functions.