Report: The AI Assisted Coding Landscape in October 2024

A deep dive into available tools and relevant considerations for their use in the workplace.

A Phase of Rapid Progress

As discussed previously, there is some indication that generative AI tooling can significantly increase developer productivity. In a field desperate to find real-world use cases with product-market fit, localized areas of real productivity gains, such as coding, quickly become crowded, with new tools, state-of-the-art models and competitors emerging on a daily basis.

This “Cambrian Explosion” of AI SWE tools is giving birth to an ecosystem of rapidly increasing complexity and creates major challenges for most companies looking to adopt the technology:

- Mapping SOTA capabilities and staying on top of the rapidly evolving landscape.

- Establishing background knowledge about the nuances and tradeoffs between “open source” and “proprietary” models.

- Approximating and comparing costs and benefits across different tools with wildly varying business models.

- Understanding, assessing and mitigating data governance, intellectual property and security risks.

- Performing due diligence and vendor onboarding.

- Completing pilot deployment, overcoming technical challenges and delivering employee training.

all before the technology landscape has changed completely again in approximately 6 months. Ouch indeed 😣.

In this report, we will be discussing these challenges in more detail before providing a snapshot of the current state of the art in AI coding tools and our recommendations for companies looking to adopt these technologies.

Welcome to a rapidly evolving, increasingly complex and completely unsustainable ecosystem!

Staying on top of rapid progress

With hundreds of billions of dollars of private investment propelling research and product development forward, establishing “what is possible with generative AI today” has been a constant challenge, even for experts, for the last 24 months. The same money has also driven unprecedented hype, the proliferation of snake-oil tools and misleading marketing narratives, obfuscating the actual state of the art.

Reminder: Centre for AI Leadership members can take advantage of the curated Code Generation Channel to keep their key stakeholders informed about key developments in the space.

Establishing which model is “the best” at any given time is impossible. Benchmarks are unreliable and trivially manipulated, and almost every vendor is claiming “best in class” performance for their model at any given time. While we currently consider claude-3.5-sonnet the strongest generalist coding model, this is a static assessment. Ultimately, the only way to find out if a model is a good fit for a company, codebase and use case is to perform due diligence in a pilot deployment.

Capability does not equal productivity, yet.

As developers are increasingly finding out, new capabilities do not necessarily translate into the outcome a company is looking for: productivity gains. Products like Microsoft Copilot, advertised as productivity boosters for the average employee, are not yet living up to expectations and are, in fact, increasingly reported as a distraction and a productivity drain.

Mixed signals on AI coding tools

The early feedback for coding assistants is similarly mixed. While many developers are self-reporting significant productivity gains and large tech companies like Amazon are raving about them, company-wide surveys of some of the earliest adopters show only modest improvements, if any.

Devs gaining little (if anything) from AI coding assistants

Code analysis firm sees no major benefits from AI dev tool when measuring key programming metrics, though others report incremental gains from coding copilots with emphasis on code review.

Where developers feel AI coding tools are working—and where they’re missing the mark

Our annual survey of more than 65,000 developers captures a portrait of the global developer community: who you are, how you learn, what kind of job you have, the tools and technologies you use, and more. We previously summarized some of the results in this blog post, but we wanted to dive deeper into certain insights the survey uncovered.

Andy Jassy on LinkedIn: One of the most tedious (but critical tasks) for software development… | 159 comments

One of the most tedious (but critical tasks) for software development teams is updating foundational software. It’s not new feature work, and it doesn’t feel… | 159 comments on LinkedIn

Given the rapid technical advancement in the field, however, it is not clear how representative surveys of year-old technology (like the original GitHub Copilot) are of the current state of the art. The only way to find out, for most companies, is to explore the technology in a pilot deployment.

Progress is furious, and gains are not exhausted by far.

Progress on code tooling is furious, in part because of the many low-hanging fruit in improving integration with development environments, IDEs and developer workflows. The state of the art has moved from increasingly sophisticated auto-complete and code suggestions in 2022, to reliably performing high-volume tasks like synthesizing React frontend components in 2023, to being able to plan, create, document and deploy moderately complex framework applications adhering to best practices, heralding a near future where many currently manual development tasks will become increasingly automated.

Hands on experience with the technology is critical!

We strongly recommend that technical decision makers spend the five minutes it takes to test out Bolt to understand the massive leaps in capability these tools have made in the last 6 months, as well as their limitations.

A simple prompt like:

Please create an internal skill and mentorship platform using an Astro/ShadCn/TailwindCss stack, allowing our employees to advertise their skills and skill level for key business and technical skills, flag themselves as experts and available mentors and listing the company L&D catalog. Make it modern, sleek and responsive, support light/dark mode toggle via tailwinds built in classes (text-background, etc) and use appealing placeholder imagery where possible.

will create a mostly functional Astro application and deploy it online in minutes.

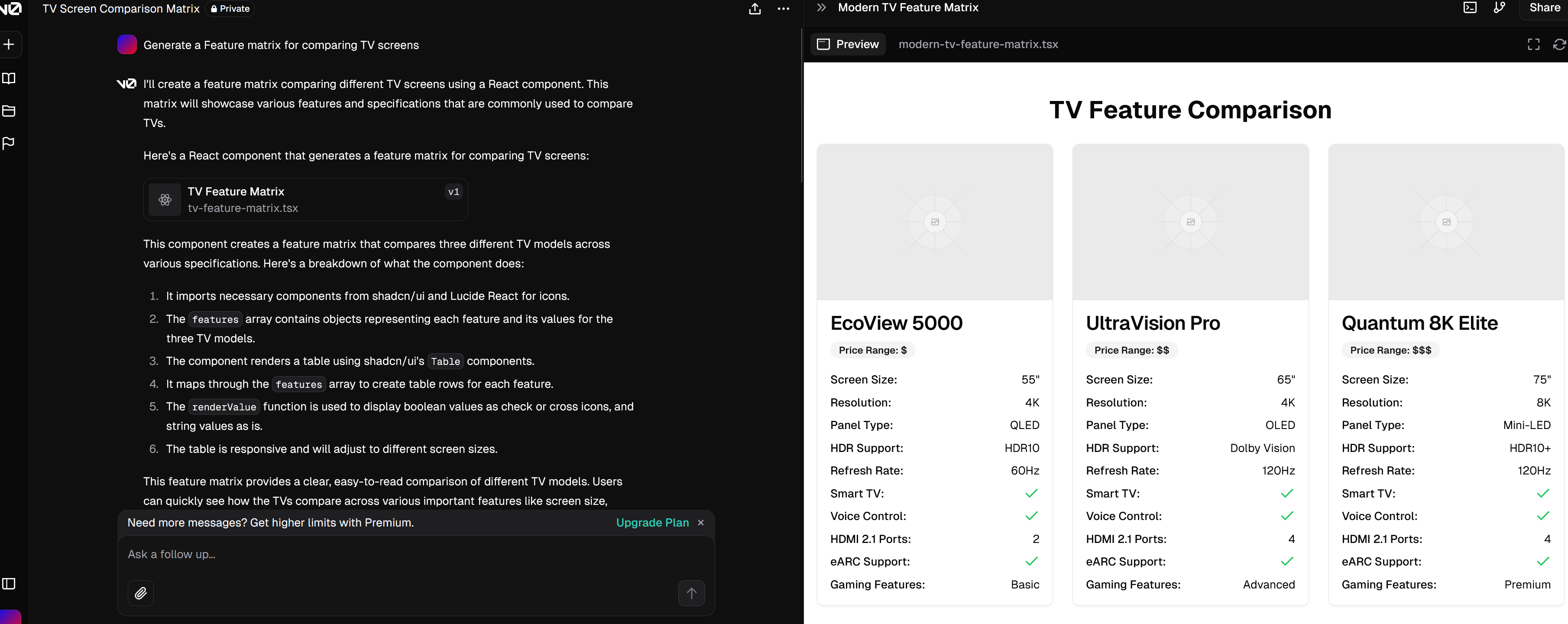

Example: StackBlitz’s Bolt synthesizes entire React applications with one-click deployment in minutes, while providing a fully integrated development environment.

Overall code generation model performance is part of the puzzle, but it is not the constraining factor for further gains. Top-shelf models like Claude-3.5-Sonnet, when instructed correctly, already produce highly accurate, quality code with fewer and fewer hallucinations each iteration, and context window sizes have increased far enough to allow inference that takes large parts of the codebase into account.

Instead, developer experience, workflow integration and codebase maintenance are increasingly moving into focus as the limiting factors for productivity gains, and we can expect rapid improvements in this area. Going forward, we can expect competition among model providers to shift from increasingly converging model performance to developer tooling - a trend kicked off by Claude’s Artifacts and now furiously accelerated by startups like Bolt, Cursor and Tabnine. Big Tech, while having one leg in the game, can be expected to hold back on product investments, letting startups lead the way in finding product-market fit and derisking innovation - after all, they can always acqui-hire or crush startups using their compute, model, data and platform supremacy.

Decoding Open Source Misconceptions

With the AI landscape bifurcated between “open source” and “proprietary” models, it is important to understand the nuances and tradeoffs between the two.

A commonly cited benefit of open source (or rather open weights) models is that they are under control of the company/user and therefore not subject to sudden business continuity risks, such as the shutdown or sale of a third party provider, trade/sanctions risks and dependency on specific providers.

Unfortunately, none of the leading “open source” code models are actually open source: While their weights may be published, the data used to train them is not. This means that the model cannot be reproduced by the user and its long-term existence is subject to the whims of the provider and their ability to retrain the model. Data, in other words, is a clear opportunity for building moats, and the highly centralized nature of software development around GitHub poses an increasing risk for many third-party providers: It is unlikely that Microsoft will allow the competition to scrape GitHub data at scale in the long run.

For coding models specifically, “keeping the model” is a misconception: Due to the rapidly evolving nature of the software engineering ecosystem, coding models depreciate in value over months, not years. As common libraries, interfaces and APIs change over time, even open source models have to be constantly retrained to stay up to date and relevant, or they quickly start producing increasingly irrelevant, faulty results. A model that is several months old is likely to be unusable for modern software development - a problem currently obscured by the rapid release of new models into the market.

This very stream of new models, however, is unlikely to persist in the long run: The price point of many open models at “free” is completely unsustainable in light of constant data acquisition and retraining costs. While a large number of providers are currently releasing open models to acquire users and woo investors, it is clear that this is a short-term phenomenon: Over time, the increasing costs of retraining and compute are entirely unsustainable (see further discussion on costs below). Will companies like Meta, Microsoft and Alibaba continue to provide free, subsidized models to developers once the competition has been starved out of the market? Unlikely.

For a much deeper discussion of the open source vs proprietary situation, we recommend Michael L. Bąk’s excellent article for techpolicy.press:

Unmasking Big Tech’s AI Policy Playbook: A Warning to Global South Policymakers

Michael L. Bąk explains how companies are using fear of regulation to distract policymakers from steering AI development toward equitable, inclusive outcomes.

Establishing cost in a complex and unsustainable landscape.

Before selecting and adopting AI coding tools across an organisation, it is important to establish short and long term cost, compare offerings and validate long term business viability. This however is a non-trivial task in the current landscape.

Current pricing models are not sustainable

Early offerings, such as GitHub Copilot and ChatGPT Plus, use flat-rate instead of usage-based pricing, significantly reducing risk and accounting burden for companies and users. But while inference costs have been driven down by two orders of magnitude over the last two years through optimisation breakthroughs and better hardware, the cost of training (and retraining) models keeps creeping up and is projected to continue to do so. This has turned most of these early offerings into loss leaders: As of 12 months ago, Microsoft was reportedly losing USD 20 per month on every user of GitHub Copilot’s USD 10/month subscription, with OpenAI incurring similar losses per ChatGPT Plus subscription and running a multi-billion dollar deficit.

Overall AI products incur significantly higher per user expenses compared to traditional SaaS software products, requiring usage based pricing to become long term sustainable. The current flat-rate pricing model is great for overcoming customer hesitance (compared to complex per token pricing), but will have to change going forward.
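The arithmetic behind the loss-leader claim can be made explicit. The minimal sketch below uses only the two figures quoted above (a USD 10 monthly price and a reported USD 20 monthly loss per user). The function name is ours, and the result is an implied average, not a vendor-disclosed cost.

```python
def implied_cost_to_serve(price_per_month: float, loss_per_user: float) -> float:
    """Average monthly cost per user implied by a flat price and a reported loss."""
    return price_per_month + loss_per_user


price = 10.0  # USD per month, the GitHub Copilot price quoted in the text
loss = 20.0   # USD per user per month, the reported loss quoted in the text

cost = implied_cost_to_serve(price, loss)
multiple = cost / price  # how far the flat price would have to rise to break even

print(f"Implied cost to serve: USD {cost:.2f} per user per month")
print(f"Flat price needed to break even: {multiple:.1f}x the current price")
```

Under these figures, a flat rate would have to roughly triple just to cover the average user, which is why usage-based pricing is the more likely direction.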

Self cannibalization and long term viability

When the technology works and increases company efficiency, the likely outcome for many providers like Microsoft is self-cannibalization: If clients require fewer developers, Microsoft’s per seat revenue for both AI products like CoPilot and key revenue drivers such as Office, Teams and Windows will invariably decrease. Therefore, it is a given that the cost of AI products will have to increase over time to compensate. This, of course, is an entirely familiar situation. Most scaled technology businesses are initially subsidized to gain users and market share, then transition to profitability by increasing prices as they grow and users are locked into a consolidated ecosystem. Nobody is riding Grab for SGD5 to Changi Airport anymore, and companies who may have “realized” productivity gains from AI tools through layoffs may find themselves in a dependency trap long term.

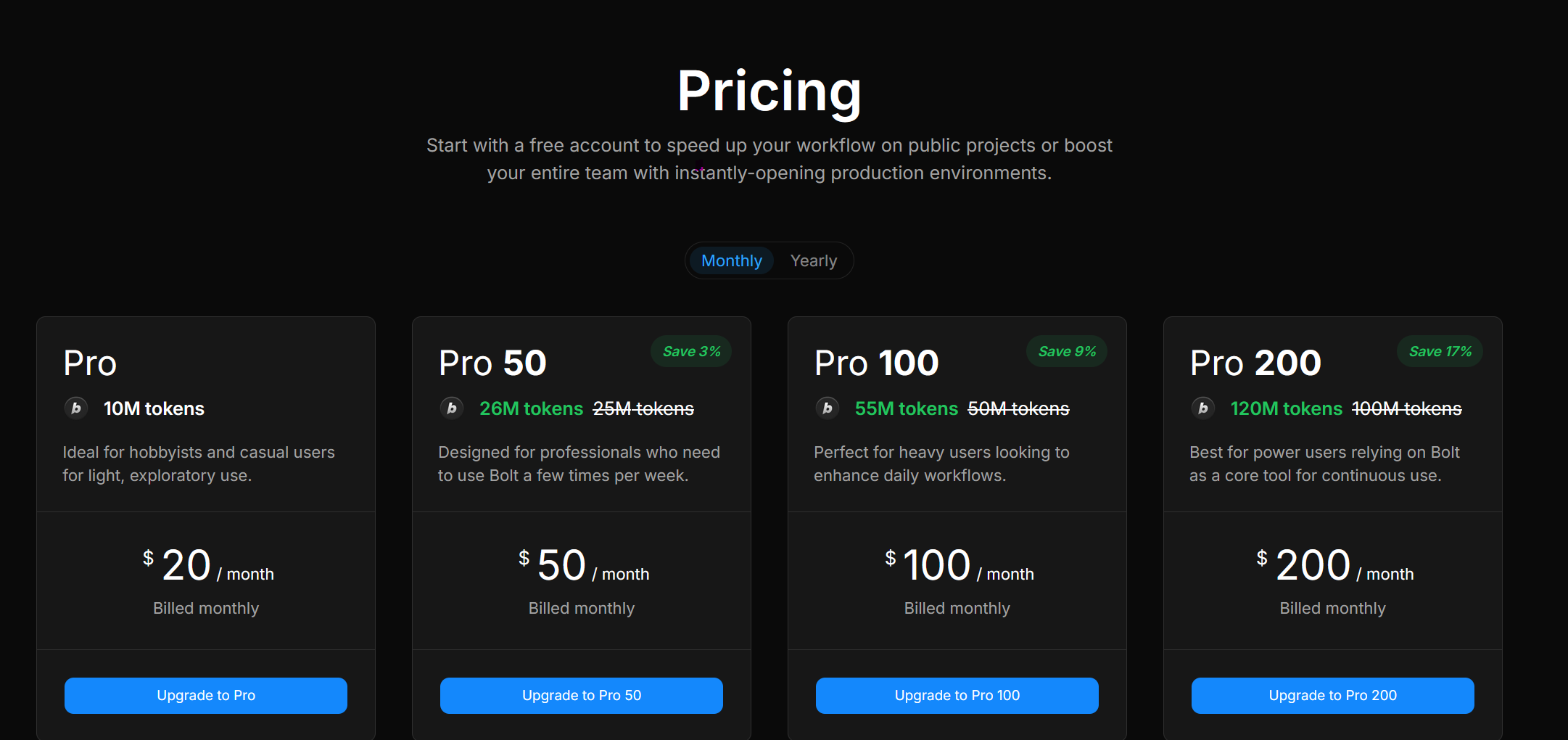

Trend to complex premium pricing

For the aforementioned reasons, the current trend across providers is towards hybrid, usage-based pricing schemes and enterprise-level plans, ranging from pure “total token” pricing to more complex “input/output” pricing, “hidden tokens” (o1 model) or even “messages per day” (Claude, ChatGPT). Both Claude and ChatGPT offer premium-priced team and enterprise plans well in excess of the standard USD 20/month consumer subscriptions.

Example: Bolt charges for total tokens per month, something impossible to predict in advance for most people.

Because token consumption varies widely with context, it is difficult, if not impossible, for developers to predict it in advance, especially with more “autonomous” models like o1 and highly automated IDEs like Bolt. It is not uncommon for a developer to burn through their entire token budget for the month in a handful of days, especially when using the more advanced models, reviving uncomfortable memories for corporate IT departments burned by exploding cloud bills in the past.
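To get a feel for how quickly a token budget can disappear, a rough estimate along the following lines can help during a pilot. This is a sketch under stated assumptions: the per-token prices, request sizes and request counts are hypothetical placeholders, not any vendor's actual rates, and should be replaced with measured values.

```python
from dataclasses import dataclass


@dataclass
class TokenPricing:
    """Hypothetical per-million-token prices in USD; not taken from any vendor."""
    input_per_million: float
    output_per_million: float


def request_cost(pricing: TokenPricing, input_tokens: int, output_tokens: int) -> float:
    """Cost of a single request under input/output based pricing."""
    return (
        input_tokens / 1_000_000 * pricing.input_per_million
        + output_tokens / 1_000_000 * pricing.output_per_million
    )


def days_until_budget_exhausted(monthly_budget: float, daily_cost: float) -> float:
    """Number of days a monthly budget lasts at a constant daily spend."""
    if daily_cost <= 0:
        return float("inf")
    return monthly_budget / daily_cost


# Illustrative assumptions only: large context per request, short answers.
pricing = TokenPricing(input_per_million=3.0, output_per_million=15.0)
per_request = request_cost(pricing, input_tokens=50_000, output_tokens=2_000)
daily = per_request * 40  # assumed 40 requests per developer per day

print(f"Cost per request: USD {per_request:.3f}")
print(f"Daily cost: USD {daily:.2f}")
print(f"A USD 20 budget lasts {days_until_budget_exhausted(20.0, daily):.1f} days")
```

Note that in this example the input side dominates the bill: tools that send large parts of the codebase as context with every request drive cost far more than the generated output does.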

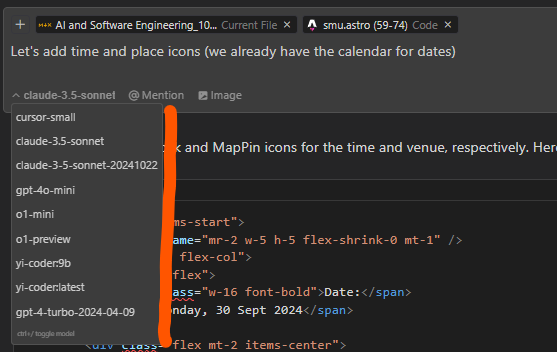

Example: Cursor IDE allows users to select between different models, each with their own pricing model and cost structure depending on context size.

Overall, the lack of standardized pricing methodologies across providers, hard-to-anticipate operational costs and extremely limited billing and auditing transparency in most tools create serious logistical challenges for companies looking to adopt AI coding tools across their organizations.

Expect Consolidation

We believe the current AI coding tool gold rush will run its course over the next 6-10 months before giving way to brutal consolidation. Most startups will not be able to compete with large tech providers in the long run and will either be acquired or fail. Chances are that Visual Studio Code will remain the dominant development environment. Not all products are doomed, however. Some, for example StackBlitz’s Bolt, are complementary to their parent company’s existing products - for example by funneling users into profitable cloud hosting services via one-click deployment - and thereby provide their developers with a solid business model.

Data Governance and Egress Risks

Data egress is a significant challenge for AI coding tools. Understanding if, when and to where data leaves the user’s computer and the company network for third-party providers is anything but trivial, and more often than not, the user is scarcely aware of the implications.

Since the most powerful coding models are proprietary and cloud-hosted, or require significant compute infrastructure to run, the default assumption should be that any use of these tools will result in data being sent to third-party providers.

While some solutions exist to run inference on the user’s device or company premises, the vast majority of tools are not built with this scenario in mind and will result in data being sent to the cloud. Some providers like Tabnine or Codium provide assurances in their SOC disclosures that data sent to their models is handled ephemerally and is not processed beyond LLM inference.

The opportunity for unwanted and potentially uncontrollable data egress increases significantly when tools integrate deeper into the development environment. Tools such as Visual Studio Code plugins, or forks such as Cursor, often communicate with multiple third-party providers at once as part of their operation, each with their own policies and practices for data handling, training, intellectual property rights, managing opt-out, retention and security.

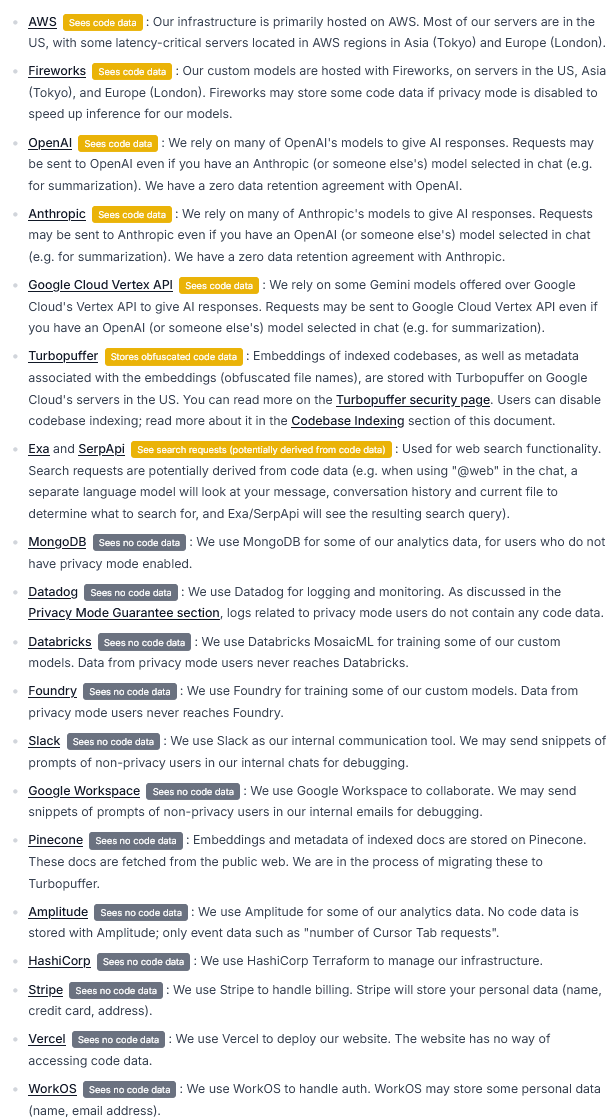

Just how complex can AI infrastructure and data-flow behind modern AI coding tools be? Here’s an example data flow and storage disclosure sheet (from Cursor IDE):

The data egress risks involved in AI tooling are significant and multi-layered:

- Leakage of critical, security-relevant data such as environment variables, database connection strings, API keys and CI pipelines outside company confines.

- Leakage of stack traces, error messages and internal logs potentially including sensitive, protected information such as PII, protected health information (PHI) and other regulated data.

- Loss of proprietary codebase, product development plans and developer information to third parties and potentially into the training data of future LLMs.
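The first category above lends itself to a simple technical mitigation: scrubbing obvious credentials from any text before it leaves the machine. The following is a minimal sketch, assuming a hypothetical `redact` helper that a company would run over context before it is sent to a provider. The two patterns are illustrative only and would miss many real-world secret formats.

```python
import re

# Illustrative patterns only; a real deployment would need a far broader rule set.
SECRET_PATTERNS = [
    # NAME=value assignments whose name suggests a credential
    (
        re.compile(
            r"(?im)^([ \t]*[A-Z0-9_]*(?:SECRET|TOKEN|PASSWORD|API_KEY)[A-Z0-9_]*[ \t]*=[ \t]*)(.+)$"
        ),
        r"\1[REDACTED]",
    ),
    # Passwords embedded in connection strings: scheme://user:password@host
    (
        re.compile(r"(\b[a-z][a-z0-9+.-]*://[^\s:/@]+:)([^\s@]+)(@)"),
        r"\1[REDACTED]\3",
    ),
]


def redact(text: str) -> str:
    """Replace values that match the known secret patterns with a placeholder."""
    for pattern, replacement in SECRET_PATTERNS:
        text = pattern.sub(replacement, text)
    return text


sample = """DATABASE_URL=postgres://app:s3cr3t@db.internal:5432/prod
STRIPE_API_KEY=sk_live_123
DEBUG=true"""

cleaned = redact(sample)
print(cleaned)
```

Pattern-based redaction only reduces the most obvious leaks. It does nothing for PII inside logs or for the proprietary code itself, so it complements rather than replaces vendor due diligence.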

Legal questions around potential copyright violations by code models and the ability to copyright AI-generated code remain of active concern to larger organisations, although the initial lawsuit against Microsoft for training GitHub Copilot has been largely dismissed.

Coders’ Copilot code-copying copyright claims crumble against GitHub, Microsoft

A few devs versus the powerful forces of Redmond – who did you think was going to win?

In order to drive adoption, many vendors are offering to cover the cost of any potential lawsuits (IP indemnity) for their higher-tier enterprise customers, while others claim to only train their models on permissively licensed content.

Vendor Due Diligence

Even when a company is able to navigate snake-oil vendors, copycat startups and hype-inflated product narratives, performing due diligence on a vendor is a non-trivial task.

The AI startup drama that’s damaging Y Combinator’s reputation

A Y Combinator startup sparked a huge controversy almost immediately after launching. The bad PR eventually spread to YC itself.

Many of the startups providing cutting-edge tooling in the space are only a few months old, have not yet demonstrated long-term viability and may well disappear or be acqui-hired long before vendor onboarding and pilot deployment are complete. And even well-established companies such as Vercel or StackBlitz are facing massive infrastructure costs, legal risks and ruinous competition among each other and from open source offerings, making long-term commitment to products a risky proposition.

Baidu CEO: 99% of AI companies won’t survive bubble burst

Baidu CEO Robin Li believes that only about one percent of AI companies will endure after the inevitable burst of the AI bubble. Li suggests this will…

Vendor due diligence for AI tool adoption is therefore proving significantly more challenging than for traditional software:

- Even well understood concepts such as data localisation and compliance have been rebranded with new terminology (“Sovereign AI”).

- The technology’s frontier status, complex technical stack and black-box nature make it incredibly difficult to accurately assess risks.

- In-progress regulation and broad overlap with existing regulatory regimes in data protection, copyright, intellectual property, personality rights, privacy, labor and competition law create a highly complex regulatory environment.

- Due to the consumer-first focus of most vendors, enterprise-level features such as SSO, audit logs, RBAC and other security and compliance features are often missing or not fully baked.

All this is exacerbated by the serious lack of trained experts across legal, compliance and technical teams in most companies.

Far from Plug and Play

Generative AI software is associated with the idea of easy, chat-bot like interfaces and the promise of driving productivity gains by democratizing access to powerful technology by lay people. Unfortunately, this promise is more sci-fi than reality, especially for coding tools.

We find, contrary to some published studies, that the primary beneficiaries of AI assistance tools are highly experienced developers who are able to instruct these models effectively and have the ability to evaluate the results for performance, correctness and security.

Risk of increased tech debt: Handing the technology to junior developers at scale risks facing a rapidly increasing codebase full of subtle bugs, tech debt and security issues to be remediated by experienced developers.

Understanding LLM fundamentals, limitations and best practices is critical for using these tools effectively and safely. Unfortunately, known truths in this area are rapidly evolving and last year’s best practices quickly become today’s anti-patterns. With much of the technology being written on the frontier, this creates significant challenges for training and upskilling efforts, as training providers lack access to the highly competitive talent currently engaged in applied AI work. Free courses, as offered by many large AI companies, are a mixed bag as well, focusing primarily on driving company adoption (“How to spend money on Bedrock / GCP / Azure”).

Given this constrained talent situation, we recommend that companies consider bootstrapping their own centre of excellence, investing in existing talent to build a culture of sharing and experimentation.

Reminder: Centre for AI Leadership members can take advantage of our curated Prompting, LLM and AI Fundamentals channels to stay on top of changing best practices, state of the art research and fundamentals.

Part 2: The AI Coding Tool Landscape

Below is a temporary snapshot of the coding tool and assistant landscape as of October 2024. Due to the rapid changes, divergent feature sets, pricing tier differences and complexity of products, we are not providing a qualitative assessment of the tools and strongly suggest due diligence on the part of the reader.

From the product variation listed below, it is evident that the product landscape is highly dynamic - no product has all the features and it is impossible to declare a one-size-fits-all winner.

Instead, we recommend that companies perform a detailed analysis of their specific needs and constraints, before selecting and deploying AI coding tools across their organization. Our own recommendations on how to approach this problem are found in Part 3 below.

LLM Coding Models and Chatbots

The most basic form of AI coding assistance is the direct use of LLM models and chatbots such as ChatGPT, Claude or Gemini, many of which have been upgraded with basic developer experience tooling, such as Claude’s Artifacts, ChatGPT’s Code Interpreter and Canvas.

These tools can go surprisingly far. Building a simple web application, for example, can be done in a few minutes with the right prompt:

Create me a Singapore Income Tax Calculator using shadcn/ui and tailwind, using the following pdfs from Singapore’s Inland Revenue Authority as reference. Add a few charts and tables to make it interesting.

While this is great for quick prototypes and foreshadows an interesting, post-app future, once the code starts spanning multiple files and the model’s context window is exceeded, copying and pasting code from the model’s output to the IDE becomes a chore. While Claude does offer a project feature to synchronize files, most of it is locked behind the expensive premium tier and most companies will not be thrilled at syncing their codebase into the cloud this way.

Claude

State of the art LLM Chatbot with vision, document processing and advanced coding capabilities.

Flagship Feature(s)

Artifacts allow real time preview of web components and code

Feature Matrix

- Python Sandbox

- Backend Server

- Web Component Preview

Hosting

Underlying Model

Claude-3.5-Sonnet

ChatGPT

LLM Chatbot with vision, audio, image generation, tool calling and Python sandbox (code interpreter)

Flagship Feature(s)

Powerful code interpreter able to run python code, and preview of web components (canvas)

Feature Matrix

- Python Sandbox

- Backend Server

- Web Component Preview

Hosting

Underlying Model

GPT-4o

Qwen-Coder-2.5

State of the art Chinese LLM with advanced coding capabilities (requires an LLM runtime like ollama)

Flagship Feature(s)

Bare-bones open model, running on Ollama and other open source LLM runtimes

Feature Matrix

- Python Sandbox

- Backend Server

- Web Component Preview

Hosting

Underlying Model

Qwen-Coder-2.5
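For readers who want to try an open-weights model without sending code to a third party, the sketch below shows what talking to a locally running runtime can look like. It assumes an Ollama server on its default local port and an already-pulled model tag (`qwen2.5-coder` here is an assumption; use whatever tag is installed locally). The helper names are ours. Nothing is sent until `generate` is called.

```python
import json
import urllib.request

# Assumptions: a local Ollama server on its default port and a model tag
# that has already been pulled. Adjust both to match the local setup.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "qwen2.5-coder"


def build_request(prompt: str) -> urllib.request.Request:
    """Build a non-streaming generation request for the local Ollama API."""
    payload = {"model": MODEL_TAG, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


def generate(prompt: str) -> str:
    """Send the prompt to the local runtime and return the generated text."""
    with urllib.request.urlopen(build_request(prompt)) as response:
        return json.loads(response.read())["response"]


# Building the request performs no network traffic; it only prepares the call.
request = build_request("Write a Python function that reverses a string.")
print(request.full_url)
```

Because the request targets `localhost`, prompts and code stay on the machine, which addresses the egress concerns discussed earlier at the cost of needing suitable local hardware.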

A word about OpenAI’s o1 model

OpenAI’s o1 model is a powerful generalist model using prompt augmentation techniques to create high quality output, including code.

o1 is not a good fit for general purpose coding assistance, due to various limitations and the inability to import existing code at scale. It is, however, one of the strongest models for supporting experienced engineers in exploring, scoping and developing software architecture and providing initial implementation guidance.

Due to high costs and the required experience to operate this model effectively, we only recommend this model for experienced developers.

Specialist Chatbots

Beyond generalist chatbots, many startups have emerged focusing on specific use cases, such as v0.dev and frontend.ai for web component creation or postgres.new for database schema generation.

V0.dev creating and previewing a react-component

These tools can provide localized productivity gains for specialized functions (such as front-end developers and designers), but their long term viability against generalist models is unclear and they primarily exist in a niche market created by a lack of focus on developer experience and special use cases by mainstream generalist models.

Where pure chatbots stumble is when moving from pure creation to editing, debugging and testing. Creating a self-contained web component with Claude is simple enough, but editing in the browser is not supported and using inference to edit code is awkward. This is where the more advanced tools start to shine.

Database.build

Formerly postgres.new, uses a chat and visual editor to generate SQL database schemas and queries

Flagship Feature(s)

Powerful visual editor, ability to connect to external databases

Feature Matrix

- In Browser Databases

- External Databases

- Create Data Model

- Import / Edit Data Model

- Execute SQL

Hosting

Underlying Model

ChatGPT-4o

v0.dev

Specialist UI frontend chatbot creating React components, specifically optimized for Next.js apps

Flagship Feature(s)

Strong alignment with next-js framework by Vercel

Feature Matrix

- Project Management

- Package Manager

- Backend Server

- Web Component Preview

Hosting

Underlying Model

ChatGPT-4o

IDE Plugins, AutoComplete and Code Assist

As IDE choice is a quasi religious topic for many software engineers, the majority of AI Coding tool developers resort to making their products available as plugins for the most common IDEs such as Visual Studio Code, Visual Studio, Jetbrains, etc. This avoids the massive friction of forcing users to switch, learn new tools and change workflows.

Moving beyond chatbots, these plugins enable developers to operate effectively on larger codebases, edit code manually and benefit from the context-aware autocomplete and code suggestions that these tools offer. The sophistication of these plugins varies widely from product to product and price tier to price tier. While some products, like the original GitHub Copilot, primarily offer advanced, context-aware autocomplete and code suggestions, others, like Tabnine, add AI automation across the full lifecycle of application development, from planning to deployment.

GitHub Copilot

AI driven intellisense, code completion and pair programming. Most advanced features are available only on the expensive enterprise plan

Flagship Feature(s)

Non intrusive autocomplete with optional AI chat.

Feature Matrix

- Training Exclusion

- Code Autocomplete

- Security Scanning

- Test Creation

- Debugging

- Documentation

- CLI Completion

- Code Explanation

- IP Indemnity

Hosting

Underlying Model

OpenAI Codex

Tabnine

Privacy-focused AI plugin supporting many popular IDEs, offering full stack AI assistance from planning and coding to testing and deployment.

Flagship Feature(s)

Strong intellectual property, privacy and data protection assurances

Feature Matrix

- Training Exclusion

- Code Autocomplete

- Security Scanning

- Test Creation

- Debugging

- Documentation

- CLI Completion

- Code Explanation

- IP Indemnity

Hosting

Underlying Model

Custom model

Amazon Q Developer

Provides inline code suggestions, vulnerability scanning, and chat in popular integrated development environments (IDEs). Formerly Amazon CodeWhisperer.

Flagship Feature(s)

Legacy Code Updating and Refactoring (Java)

Feature Matrix

- Training Exclusion

- Code Autocomplete

- Security Scanning

- Test Creation

- CLI Completion

- Debugging

- Code Explanation

- IP Indemnity

Hosting

Underlying Model

Custom Model

Codeium

AI driven intellisense, code completion and pair programming. Most advanced features are available only on the expensive enterprise plan

Flagship Feature(s)

Non intrusive autocomplete with optional AI chat.

Feature Matrix

- Training Exclusion

- Code Autocomplete

- Security Scanning

- Test Creation

- Debugging

- Documentation

- CLI Completion

- Code Explanation

- IP Indemnity

Hosting

Underlying Model

ChatGPT-4o, Claude-3.5-Sonnet

Google Gemini Code

IDE Plugin for many popular IDEs, supporting more than 20 programming languages with advanced features like cited sources and code transformation actions.

Flagship Feature(s)

Massive 1M token context window enables extensive code understanding, customisation and generation. Firebase integration.

Feature Matrix

- Training Exclusion

- Code Autocomplete

- Security Scanning

- Test Creation

- Debugging

- Documentation

- CLI Completion

- Code Explanation

- IP Indemnity

Hosting

Underlying Model

Google Gemini 1.5 Pro

GitHub Enterprise

GitHub Copilot with deeper enterprise and knowledge base integration and web search.

Flagship Feature(s)

Full integration with github projects (issues, pull requests, etc)

Feature Matrix

- Training Exclusion

- Code Autocomplete

- Security Scanning

- Test Creation

- Debugging

- Documentation

- CLI Completion

- Code Explanation

- IP Indemnity

Hosting

Underlying Model

OpenAI Codex

Continue

One of the earlier IDE plugins for VSCode and JetBrains, offering an integrated AI chatbot for coding that supports a variety of model providers

Flagship Feature(s)

Multi provider support.

Feature Matrix

- Training Exclusion

- Code Autocomplete

- Security Scanning

- Test Creation

- Debugging

- Documentation

- CLI Completion

- Code Explanation

- IP Indemnity

Hosting

Underlying Model

User Selected Model

New IDEs and Code Editors

Going a step beyond Visual Studio Code plugins, new IDEs and code editors promise integration with issue management, source code management systems, continuous integration and testing systems as key differentiators.

In practice, there are currently two different approaches: Some of these tools are simple “forks” of existing tools, primarily Visual Studio Code, with a handful of additional AI-powered features, while others are deploying custom web-based solutions using novel technologies like WebContainers to automate deployment, at the expense of the more sophisticated developer experience of full desktop IDEs.

Replit’s selling points include a fully portable experience enabling development and deployment from any device, including mobile.

Bolt, for example, shines in time-to-production for small to medium apps, but once a certain complexity is reached, the lack of a full desktop IDE experience makes it difficult to use and eventually requires downloading and importing the codebase into a full IDE to continue development.

Cursor

Custom fork of VSCode with integrated AI chatbot for coding supporting code lifecycle operations through a variety of models.

Flagship Feature(s)

Sophisticated, diff based workflow for code editing, manual context management. Private Mode with SOC2

Feature Matrix

- Training Exclusion

- Code Autocomplete

- Security Scanning

- Test Creation

- Debugging

- Documentation

- CLI Completion

- Code Explanation

- IP Indemnity

Hosting

Underlying Model

OpenAI Codex

Bolt

Web IDE with integrated AI chatbot for creating and deploying fully featured frontend apps in many major frameworks like Next.js and Astro.

Flagship Feature(s)

AI can operate the entire development environment including the filesystem, backend server, package manager, terminal, and browser console.

Feature Matrix

- Training Exclusion

- Code Autocomplete

- Security Scanning

- Test Creation

- Debugging

- Documentation

- CLI Completion

- Code Explanation

- IP Indemnity

Hosting

Underlying Model

ChatGPT-4o, Claude-3.5-Sonnet

Repl.it

Replit is a web based, AI-powered software development & deployment platform for building, sharing, and shipping software fast.

Flagship Feature(s)

Web Container based IDE accessible from mobile, tablet and desktop with collaboration features, focus on Enterprise features. SOC2 certified.

Feature Matrix

- Training Exclusion

- Code Autocomplete

- Security Scanning

- Test Creation

- Debugging

- Documentation

- CLI Completion

- Code Explanation

- IP Indemnity

Hosting

Underlying Model

User Selected Model

This class of tools is among the most advanced in terms of developer experience, but companies may struggle to get developers to adopt them. IDE choice is a contentious topic for developers, and even large tech companies avoid mandating specific products across their developer base for that reason. For products like Cursor, there is another problem: while a well funded startup may be able to build on top of Microsoft’s VS Code for a while, it is also in direct competition with Microsoft for AI users, and it is unclear how long Microsoft will tolerate this situation before using its massive resources and its ownership of GitHub and VS Code to start seriously competing.

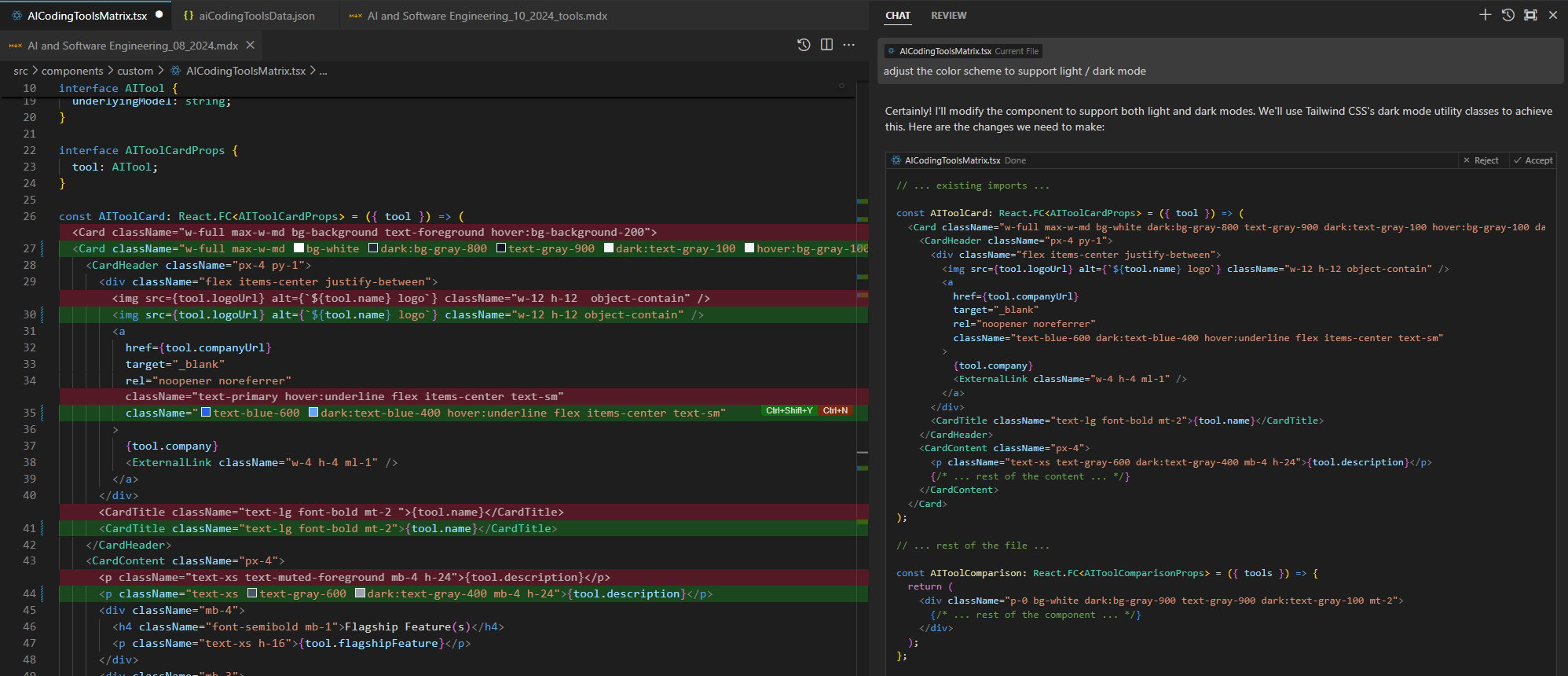

Cursor removes unnecessary cut and paste with a sophisticated, AI powered ‘diffing’ model, allowing the user to accept or reject AI suggested changes directly in code.

Agent Based Systems

Agent based systems (like “Devin”) promise full automation of software development tasks, from planning to coding to testing to deployment. Unfortunately, that’s all they do at the moment: promise, to the point of investor fraud.

We specifically leave out “agents” and agent based tooling from our analysis, as they remain a marketing gimmick more than real products. Errors compounding on top of the low reliability of the underlying model (the agent’s “brain”) doom these products to fail for all but the most trivial use cases at this point, and fundamental breakthroughs are needed before they become serious contenders.
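The compounding-error argument can be made concrete with a little arithmetic. The sketch below is illustrative only: it assumes each step of an agent's task succeeds independently with the same probability, which real systems will not match exactly, and the per-step rates and step counts are hypothetical values, not measurements of any product.

```python
def chain_success_probability(per_step_success: float, steps: int) -> float:
    """Probability that all `steps` steps succeed.

    Assumption (for illustration): steps are independent and share the
    same success rate, so the probabilities simply multiply.
    """
    if not 0.0 <= per_step_success <= 1.0:
        raise ValueError("per_step_success must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_success ** steps


if __name__ == "__main__":
    # Hypothetical per-step reliabilities and task lengths.
    for p in (0.99, 0.95, 0.90):
        for n in (5, 20, 50):
            chance = chain_success_probability(p, n)
            print(f"per-step {p:.0%}, {n:>2} steps -> {chance:.1%} end-to-end")
```

Under these assumptions, even a step that works 95% of the time leaves a 20-step task with roughly a one-in-three chance of completing without error, which is why long autonomous task chains degrade so quickly.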

No Code Tools

Another category we are not covering in our report is “No code tools”, including powerful design tools such as Figma and Canva that are moving into the code generation space and promise designers that they can take over the coding role. While this may be a necessary option to consider for some organisations with a serious lack of engineers, for most companies the drawbacks of handing over control of their codebase to non-engineers outweigh any possible productivity gains.

C4AIL Recommendations

While large tech companies are teasing tantalizing internal productivity gains from AI coding tools, most other companies lack the resources (internal AI experts, expert developers, product teams, compute and training resources) and agility to act on these opportunities during the current phase of rapid innovation and capability development.

Due to the extensively discussed risk factors, rapid progress and unstable product landscape, we currently recommend that companies do not roll out AI coding tools across their entire developer base.

Instead, we recommend that organisations

- Build competence and situational awareness of the rapidly changing landscape.

- Establish clear risk understanding, governance and policy frameworks.

- Invest in fundamental training and competence around the technology for leadership and key technical contributors.

- Foster a culture of hands on exploration and sharing by experienced developers and technical leaders using small scale pilots and hackathon formats.

before considering a broader rollout. Chances are that products will continue to improve rapidly during the next 6-12 months, leading to easier deployment, better enterprise integrations and more clarity on legal, compliance and sustainability issues.

Based on our experience and that of our clients, we recommend that companies take the following approach:

- Calibrate expectations: While AI coding tools have the potential to deliver significant productivity gains, they are not a silver bullet and are not a substitute for experienced developers at this time. Understanding the real world capabilities and limitations of these tools beyond the shiny advertisement material from AI vendors is critical.

Understanding how the technology affects developer productivity is critical. Beyond saving time on tasks like writing tests, significant gains are made by reducing context switches and distractions associated with documentation searches and research (a benefit only effective while the underlying model is relatively current).

Another well documented effect of AI coding tools is that they prevent learning for less experienced developers. The act of writing code from scratch is a critical part of a developer’s learning journey and replacing it with AI synthesis has been shown to have a negative impact on developer skills and growth.

Finally, companies should anticipate skepticism, rejection and fear from their developer base. Many software developers find the act of writing code from scratch to be creative and fulfilling and are understandably wary of AI synthesis replacing it. Change management, acknowledgement of risks and concerns, and nuanced messaging are as much a key to successful adoption as the technology itself.

- Align on expected business outcomes: Before starting a pilot, companies should agree on what they hope to achieve. Are they looking to increase developer productivity, improve code quality, reduce engineering time to market, improve developer satisfaction and retention, or something else?

Establishing counter metrics and markers for long term degradation of developer productivity, code quality and security posture is equally important!

- Experienced Developers First: In the current state of AI tooling, experienced developers are required to optimally instruct models and validate the outputs of the technology. While we can expect this to change in the years to come, it currently takes an expert developer to produce quality output. Handing the technology to junior developers at scale risks facing a rapidly increasing codebase full of subtle bugs, tech debt and security issues to be remediated by experienced developers.

- Adopt Shallow, Focus on Low Hanging Fruit: In this rapidly evolving ecosystem, products depreciate rapidly in value and are likely to be overtaken by better options on an almost monthly basis, making large scale change initiatives highly risky. Companies should prioritize quick wins (such as frontend component generation) that have a high level of maturity, limited dependencies and are easy to adopt over big projects with significant change management.

Focusing on developer activities that have been automated to a high degree of accuracy and convenience, such as React/Frontend component generation, static website development, etc. is a good starting point for most companies. With limited training and access to the right tools, even junior developers can benefit from these tools, cutting tasks previously measured in days to minutes.

One of the serious benefits of the technology is the ability to accelerate proofs of concept and prototype development, especially when senior developers are involved. Taking advantage of the significantly reduced time to concept can be a game changer for companies. What can be achieved in a few days in a hackathon format supported by AI tooling is well beyond what was possible with traditional tools. Companies should consider taking advantage of this newfound agility, especially in the context of rolling out AI, to create positive momentum and de-risk projects.

- Provide Training: Foundational understanding of LLMs, coding models and their capabilities and limitations is key to successful operation of AI coding tools. Without an understanding of concepts like the context window and effective prompting patterns, and without exposure to common limitations and risk factors (such as prompt injection and hallucinations), developers are unable to use these tools effectively. Awareness of data egress, security, privacy, IP and other compliance risks requires both policy development and training. C4AIL offers specific training courses and material for this purpose.

We also recommend training around new Generative AI capabilities such as vision, embeddings and transcription models, as these have made significant strides in recent months and are increasingly replacing legacy approaches like OCR and other data extraction techniques. Creating awareness of these changes, and of how to access and securely integrate these new features without major incident, is key to successful adoption.

But Which Tool To Use?

As discussed, the optimal choice of tool is not trivial and requires a detailed analysis of the specific needs and constraints of a company. However, as a general approach and based on corporate needs and policy constraints, we recommend:

- While relatively basic, GitHub Copilot is useful for developers of all levels due to its non-intrusive auto-complete features and requires only minimal training. One risk factor to consider here, however, is that these products are not static. New features are furiously tested and deployed on almost all of them on a monthly basis, making it difficult to predict what will be available in the future and at which point lack of training and experience may start creating serious issues.

- Claude.ai is a great choice for synthesizing high quality frontend components for standard frameworks and design systems such as React, Tailwind, Astro, shadcn/ui and Next.js. Writing React components by hand is not something many people will be doing in the future. Claude’s project tooling bridges some of the gap to a full developer experience as well, but requires more training to use effectively.

- Bolt.new is a phenomenal platform for quickly prototyping and testing new ideas. Its fully integrated development environment makes for excellent developer velocity and minimizes boilerplate and setup time, reducing creation and production deployment of cutting edge static websites, web-apps and dashboards from weeks to minutes.

- Database.build is a good all-round database planning tool that does not lock the user into specific dependencies and is recommended for database development. There are few reasons not to use it.

- Cursor.sh is probably the most sophisticated development environment for senior developers on the market at the moment, but also one of the most involved, requiring training and careful assessment of potential policy challenges.

- For Java specific development, Amazon’s Q Code Assistant is likely a good choice for senior developers, but companies may also want to keep an eye on IBM’s Watson X Code Assistant, currently in developer preview, due to its heavy focus on Java development.

- For project scoping, research and architecture planning, experienced developers with deep subject matter expertise will find OpenAI’s o1 model to be a powerful tool. While OpenAI is overselling the “reasoning” capabilities of the model, it is extremely powerful at synthesizing complex technical documentation, code and architecture when guided by an experienced developer.

- For senior engineers in companies with extensive data protection, compliance and security policies, TabNine offers a good balance between powerful features and a strong focus on compliance and security.

About the Author

Georg Zoeller is a Co-founder of Centre for AI Leadership and a former Facebook/Meta Business Engineering Director. He has decades of experience working on the frontlines of technology and was instrumental in building and evolving Facebook’s Partner Engineering and Solution Architecture roles.